
Classification

Softmax Regression

Theory

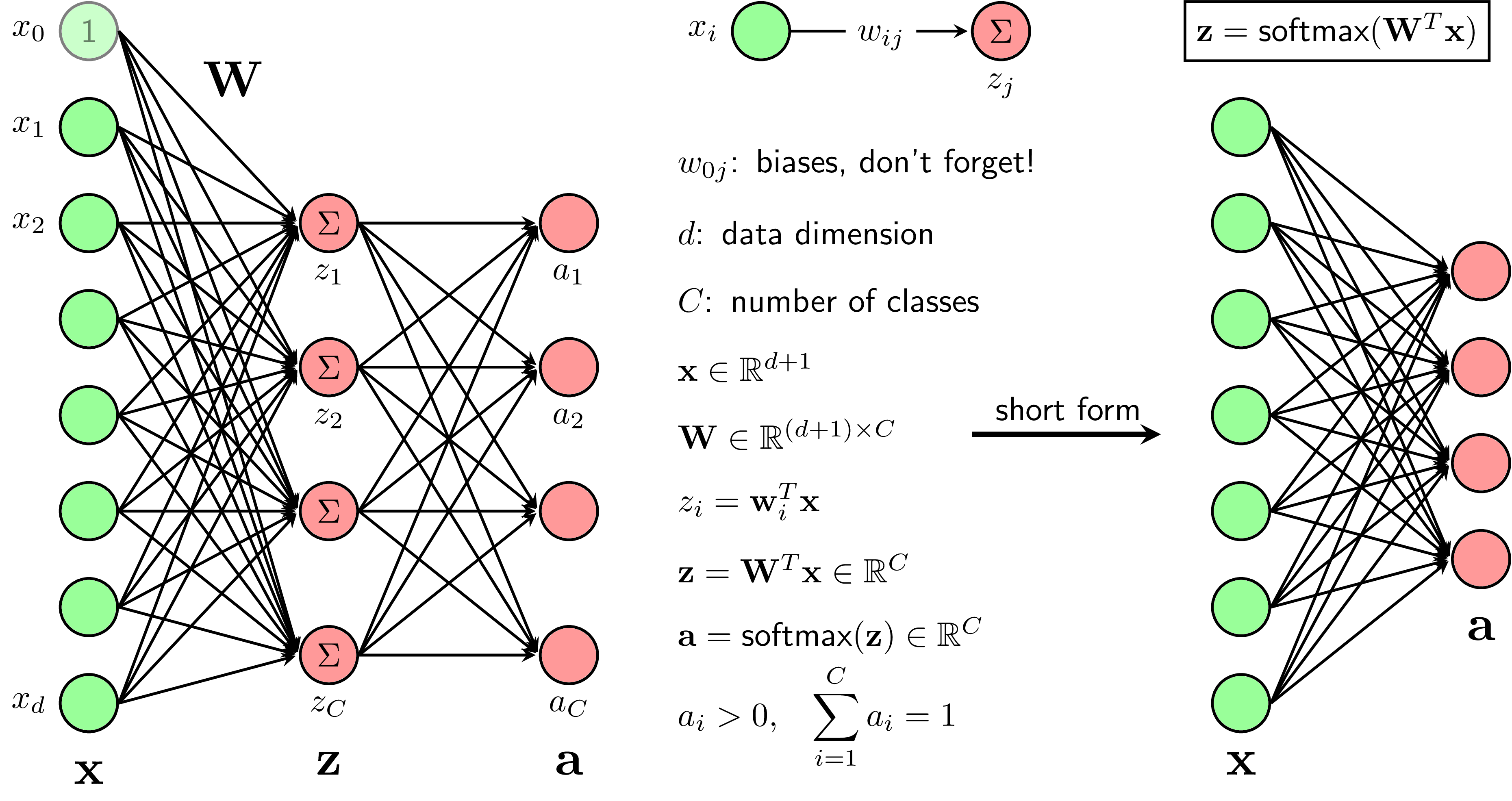

Softmax Regression (Multinomial Logistic Regression) extends binary logistic regression to more than two classes. It computes one linear score per class and outputs a probability distribution over all classes. The softmax function maps these scores to non-negative values that sum to 1, so they can be read as class probabilities.

Visualization

Mathematical Formulation

Softmax Function:

P(y=k|x) = exp(zₖ) / Σⱼ exp(zⱼ)

where zₖ = wₖᵀx + bₖ for class k

Cross-Entropy Loss:

L = -Σᵢ Σₖ yᵢₖ · log(ŷᵢₖ)
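To make the two formulas above concrete, here is a minimal NumPy sketch. The function names `softmax` and `cross_entropy`, the `eps` clipping value, and the example scores are illustrative assumptions, not part of the original material. Subtracting the row maximum before exponentiating is a common numerical-stability step; it does not change the result because softmax is invariant to adding a constant to all scores.

```python
import numpy as np

def softmax(z):
    """Row-wise softmax: exp(z_k) / sum_j exp(z_j)."""
    z = np.asarray(z, dtype=float)
    # Shift by the row max so exp() cannot overflow; the ratio is unchanged.
    shifted = z - z.max(axis=-1, keepdims=True)
    exp_z = np.exp(shifted)
    return exp_z / exp_z.sum(axis=-1, keepdims=True)

def cross_entropy(y_onehot, y_hat, eps=1e-12):
    """Total cross-entropy L = -sum_i sum_k y_ik * log(y_hat_ik)."""
    # Clip predictions away from 0 so log() stays finite (eps is an assumption).
    return -np.sum(y_onehot * np.log(np.clip(y_hat, eps, 1.0)))

# Hypothetical scores z for 2 samples and 3 classes
z = np.array([[2.0, 1.0, 0.1],
              [0.0, 0.0, 0.0]])
# One-hot labels: sample 0 belongs to class 0, sample 1 to class 2
y_onehot = np.array([[1, 0, 0],
                     [0, 0, 1]])

probs = softmax(z)
loss = cross_entropy(y_onehot, probs)

print(probs)
print("Row sums:", probs.sum(axis=1))
print("Cross-entropy:", loss)
```

Equal scores, as in the second row, give a uniform distribution, so that sample contributes log(3) to the loss regardless of its label.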

Code Example

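from sklearn.linear_model import LogisticRegression
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# Load multi-class dataset (3 iris species, 4 features)
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42
)

# Train softmax regression.
# With the default lbfgs solver, LogisticRegression fits a multinomial
# (softmax) model for multi-class targets; the explicit
# multi_class='multinomial' argument is deprecated in recent
# scikit-learn releases, so it is omitted here.
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Evaluate on the held-out 20%
accuracy = model.score(X_test, y_test)
print(f"Accuracy: {accuracy:.3f}")

# Predict probabilities for the first three test samples
# (one row per sample, one column per class; each row sums to 1)
probas = model.predict_proba(X_test[:3])
print("\nClass probabilities:")
print(probas)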
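As a cross-check between the formula and the library, the sketch below recomputes the class probabilities by hand from a fitted model's weights. It assumes a scikit-learn version in which `LogisticRegression` fits a multinomial model by default for multi-class targets; the variable names (`clf`, `manual_scores`, `manual_probas`) are illustrative.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)
clf = LogisticRegression(max_iter=1000).fit(X, y)

# Class scores z_k = w_k^T x + b_k for the first three samples
scores = clf.decision_function(X[:3])
manual_scores = X[:3] @ clf.coef_.T + clf.intercept_

# Softmax over the scores, shifted by the row max for numerical stability
shifted = scores - scores.max(axis=1, keepdims=True)
manual_probas = np.exp(shifted) / np.exp(shifted).sum(axis=1, keepdims=True)

print("Scores match:", np.allclose(scores, manual_scores))
print("Probabilities match:", np.allclose(manual_probas, clf.predict_proba(X[:3])))
```

Here `coef_` holds one weight vector per class and `intercept_` one bias per class, matching wₖ and bₖ in the formulation.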